A NEW SUFFICIENT CONDITION FOR SPARSE RECOVERY WITH MULTIPLE ORTHOGONAL LEAST SQUARES*

Haifeng LI (李海峰) Jing ZHANG (張靜)

Henan Engineering Laboratory for Big Data Statistical Analysis and Optimal Control,College of Mathematics and Information Science,Henan Normal University,Xinxiang 453007,China

E-mail:lihaifengxx@126.com;a1293651766@126.com

Abstract A greedy algorithm used for the recovery of sparse signals,multiple orthogonal least squares (MOLS) have recently attracted quite a big of attention.In this paper,we consider the number of iterations required for the MOLS algorithm for recovery of a Ksparse signal x∈Rn.We show that MOLS provides stable reconstruction of all K-sparse signals x from iterations when the matrix A satisfies the restricted isometry property (RIP) with isometry constant δ7K≤0.094.Compared with the existing results,our sufficient condition is not related to the sparsity level K.

Key words Sparse signal recovery;multiple orthogonal least squares (MOLS);sufficient condition;restricted isometry property (RIP)

1 Introduction

The orthogonal least squares (OLS) algorithm[1–11]is a classical greedy algorithm for recoveringK-sparse signal x∈Rnfrom

where x has at mostKnonzero entries (i.e.,‖ x ‖0≤K),A∈Rm×n(m?n) and w is a noise vector.OLS identifies the support of the underlying sparse signal by adding one index to the list at a time,and estimates the sparse coefficients over the enlarged support.Specifically,it adds to the estimated support an index which leads to the maximum reduction of the residual power in each iteration.The vestige of the active list is then eliminated from y,yielding a residual update for the next iteration.See[9]for a mathematical description of OLS.

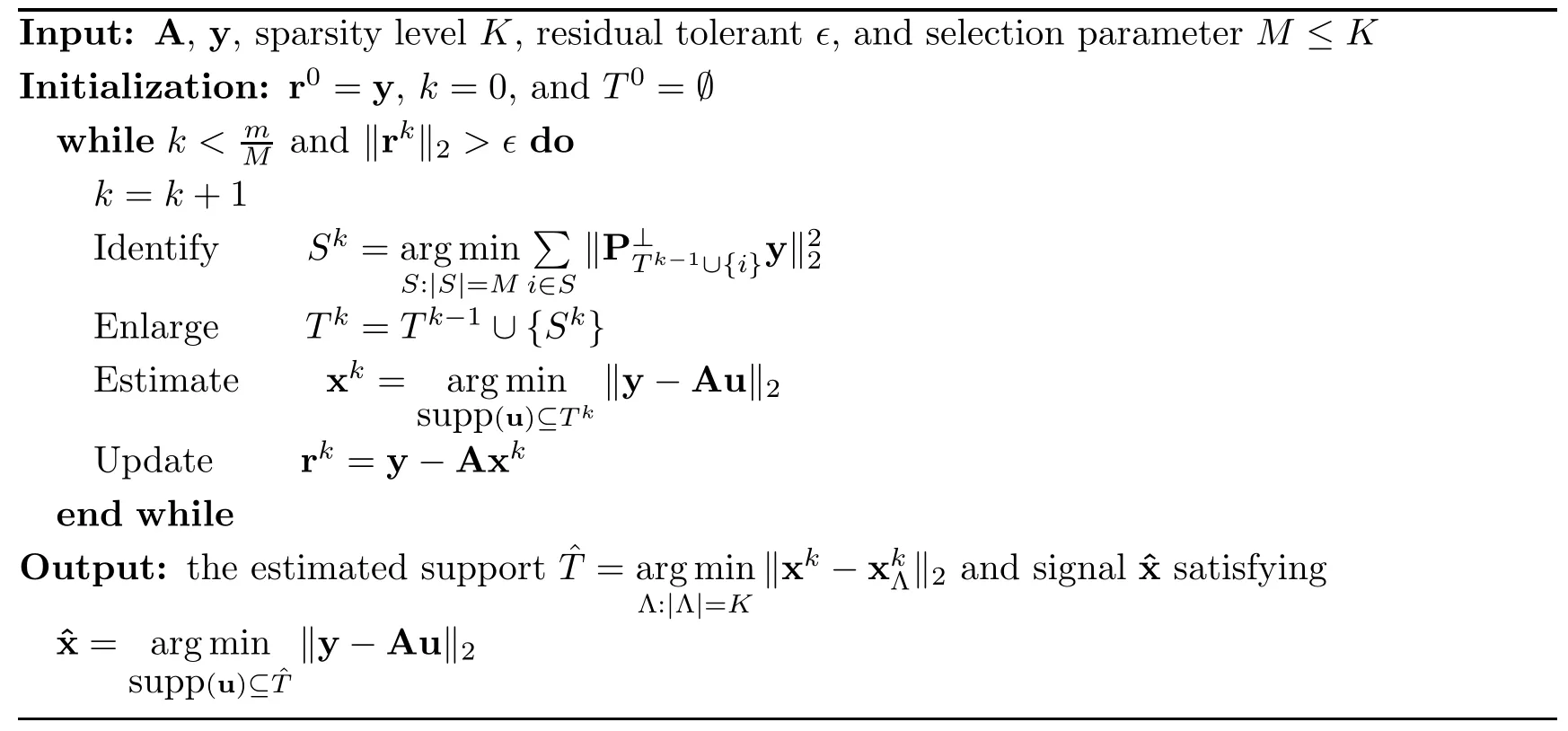

In[6]and[9],we observe that the OLS algorithm has a better convergence property,while it is computationally more expensive than OMP (orthogonal matching pursuit)(see[12–17]).The OLS algorithm has attracted much attention over the course of last several years.Recently,many efforts have also been made to study an extension of OLS,referred to as multiple orthogonal least squares (MOLS)(see[6,7]).Compared to OLS,MOLS selectsMindices at a time (see Table 1),which reduces the computational complexity and greatly improves the computational speed.An important challenge is to characterize the exact recovery conditions of MOLS using the properties of measurement matrices such as the restricted isometry property (RIP).The definition of RIP is as follows:

Table 1 The MOLS algorithm

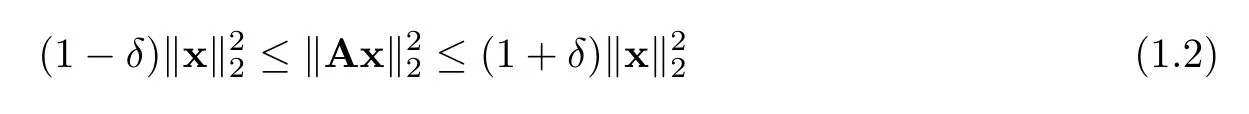

Definition 1.1([18]) A measurement matrix A satisfies the RIP of orderKif there exists a constantδ∈(0,1) such that

holds for allK-sparse vectors x.The minimumδsatisfying (1.2) is defined as the restricted isometry constant (RIC) constantδK.

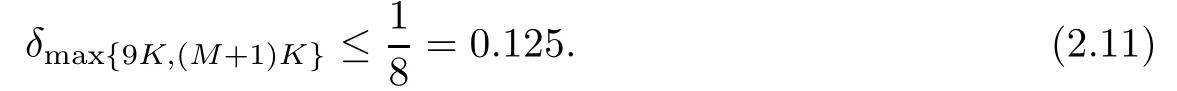

At present,most studies indicate that MOLS recoversK-sparse signals in at mostKiterations.For example,in[6],it was shown that MOLS recoversK-sparse signals in at mostKiterations under

In[7],the condition was improved to

Recently,Kim and Shim[8]presented a near-optimal restricted isometry condition (RIC) of MOLS as follows:

One can conclude from (1.3)–(1.5) that exact recovery with MOLS can be ensured when RIC is inversely proportional to

In this paper,we go further,and study the performance analysis of the MOLS algorithm.More specifically,as is shown in Corollary 2.3,MOLS achieves stable recovery of theK-sparse signal x fromiterations with

It is important to stress the novel aspects of our contribution.The works[18,19]claimed that a random matrix A∈Rm×nwith entries drawn i.i.d.from Gaussian distributionobeys the RIP withδK≤∈with overwhelming probability if

The number of required measurements is.On the other hand,our condition (1.6) requires that;this is obviously smaller than the previous results,in particular for largeK.

The rest of this paper is organized as follows:in Section 2,we present some observations and our main results.In Section 3,we provide some technical lemmas that are useful for our analysis and prove Theorem 2.2.Finally,we summarize our results in Section 4.

Notation:Denote Ω={1,...,n}.LetT=supp (x)={i|xi0,i∈Ω}be the support of aK-sparse vector x (i.e.,the set of the positions of itsKnonzero elements).Let Λ be a subset of Ω and let|Λ|be the cardinality of Λ.TΛ={i|i∈T,i/∈Λ}.xΛ∈Rndenotes the vector equal to x on an index set Λ and zero elsewhere.Throughout the paper,we assume that A∈Rm×nis normalized to have a unit column norm (i.e.,‖ Ai‖2=1 fori=1,2,...,n).1[20]has shown that the behavior of OLS is unchanged whether columns of A are normalized or not.As MOLS is a direct extension of OLS,one can verify that the normalization does not matter either for the behavior of MOLS.Let AΛ∈Rm×|Λ|be a sub-matrix of A with an index of its columns in set Λ.For any matrix AΛof full column-rank,letbe the pseudo-inverse of AΛ,wheredenotes the transpose of AΛ.stand for the projector and orthogonal complement projector,respectively,onto span (AΛ)(i.e.,the column space of AΛ).

2 Sparse Recovery With MOLS

2.1 Observations

Before giving the details of Theorem 2.2,we obtain an important observation on the MOLS algorithm.As shown in Table 1,in the (k+1)-th iteration (k≥0),MOLS adds toTka set ofMindices that results in the maximum reduction of the residual power,i.e.,

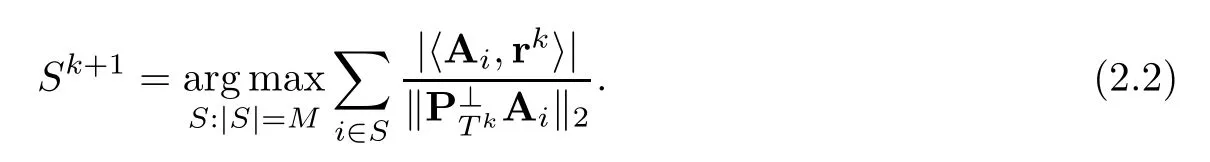

We intuitively observe that it requires the construction ofn-Mkdifferent orthogonal projections (i.e.,) to identifySk+1.This implementation of MOLS is,however,computationally expensive.In order to solve this problem,inspired by the technical report[20],Wang and Li[6]presented a cost-effective alternative to (2.1) for the identification step of MOLS.The result is given in the following lemma:

Lemma 2.1([6],Proposition 1) Consider the MOLS algorithm.At the (k+1)-th iteration,the MOLS algorithm selects the index

We can see from (2.2) that it suffices to find theMlargest values in,which is much simpler than (2.1),as it involves only one projection operator.By numerical experiments,we have indeed con firmed that the simplification offers a massive reduction in the computational cost.Hence,Lemma 2.1 plays an important role in analyzing the recovery condition of MOLS.

Moreover,note that the identification rule of MOLS is akin to that of a generalized orthogonal matching pursuit (gOMP).Specifically,in the (k+1)-th iteration,gOMP picks a set ofMindices corresponding to the column which is most strongly correlated with the signal residual,i.e.,

Clearly,the rule of MOLS differs from that of gOMP only in that it has an extra normalization factorThus,the greedy selection rule in MOLS can also be viewed as an extension of the gOMP rule.This argument has been verified (see[6,20,21]).However,this property shows that the rule of MOLS coincides with that of gOMP only in the first iteration becauseS0=? leads toFor the subsequent iterations,MOLS does make a difference,since?k≥1.In fact,as will be seen later,this factor makes the analysis of MOLS different and more challenging than that of gOMP.

2.2 Main Results

Theorem 2.2Let y=Ax+w be the noisy measurement,where x∈Rnis anyK-sparse signal supported onT,A∈Rm×nis a measurement matrix with?2-normalized columns,and w is a noisy vector.Letθk=|TSk|be the index number of a remaining support set after performingk(k≥0) iterations of MOLS.If A obeys the RIP with the isometry constant

ProofSee Section III. □

We observe from Theorem 2.2 that MOLS requires at mostadditional iterations after runningk(k≥0) iterations to ensure that the condition in (2.4) is fulfilled.In other words,the?2-norm of the residual is upper bounded by the product of a constant and ‖ w ‖2.

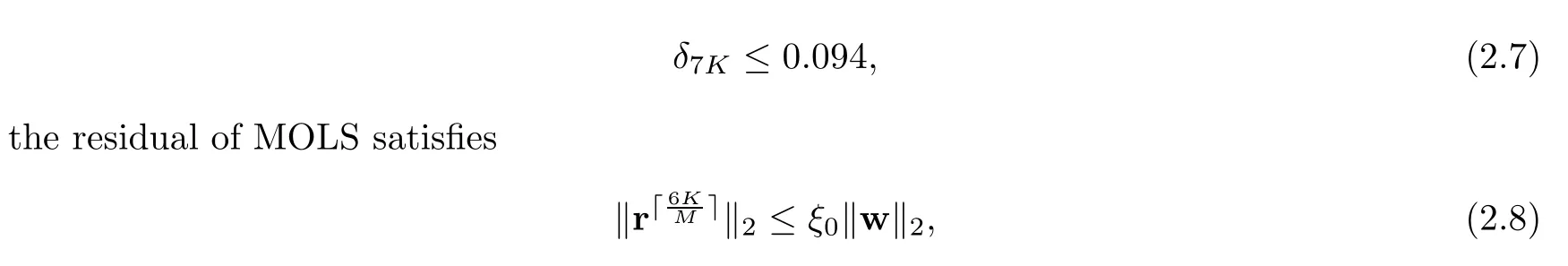

In particular,whenk=0 andθ0=|TS0|=Kin (2.4),we obtain that the?2-norm of the residual falls belowξ0‖ w ‖2.The result is as follows:

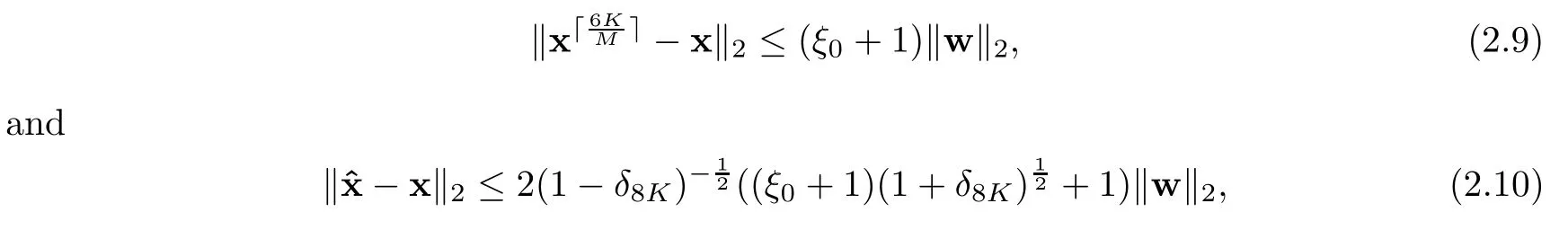

Corollary 2.3Let x be anyK-sparse signal and let A be a matrix with?2-normalized columns.If A satisfies the RIP with

whereξ0has been defined in (2.5) withk=0.

Next,we show that the?2-norm of the recovery error is also upper bounded by the product of a constant and ‖ w ‖2.

Theorem 2.4Let x∈Rnbe anyK-sparse signal,let A∈Rm×nbe a matrix with?2-normalized columns,and let y=Ax+w be the noisy sampling model.If A obeys RIP with (2.7),MOLS satisfies

whereξ0has been defined in (2.5) withk=0.

ProofSee Appendix A. □

Remark 2.5(Comparison with[8]) In[8],the authors showed that the MOLS algorithm ensures the accurate recovery of anyK-sparse signal,provided that A satisfies the restricted isometry property (RIP) with (1.5),which is the best existing result for MOLS.However,the sufficient condition (1.5) is inversely proportional toit will vanish asKincreases.Our sufficient condition (2.7) is upper bounded by a constant.

Remark 2.6(Comparison with[22]) The authors in[22]claimed that gOMP can exactly recover the supports of allK-sparse vectors from the samplesiterations if A satisfies the RIP with

It is easy to see that the number of iterative steps in our results are less than those of[22].

Remark 2.7(Comparison with[23]) In[23],the authors claimed that MOLS ensures the exact recovery of anyK-sparse vector initerations under≤0.155.According to (2.8),our results show that MOLS can recover anyK-sparse signals within.Thus,our result is better than that in[23].

Remark 2.8(Comparison with[9,24]) MOLS reduces to OLS whenM=1.From Corollary 2.3,our result indicates that OLS can exactly recover theK-sparse signals x within 6Kiteration with (2.7).The work[9]stated that OLS exactly recovers the support of anyK-sparse vector x with

The reference[24]provides a sufficient condition for OLS:

It is easy to see that the upper bounds of (2.12) and (2.13) are inversely proportional towhich requires thatmshould scale withK2log.On the other hand,the upper bound of the proposed guarantee (2.7) is independent ofK.

3 Proof of Theorem 2.2

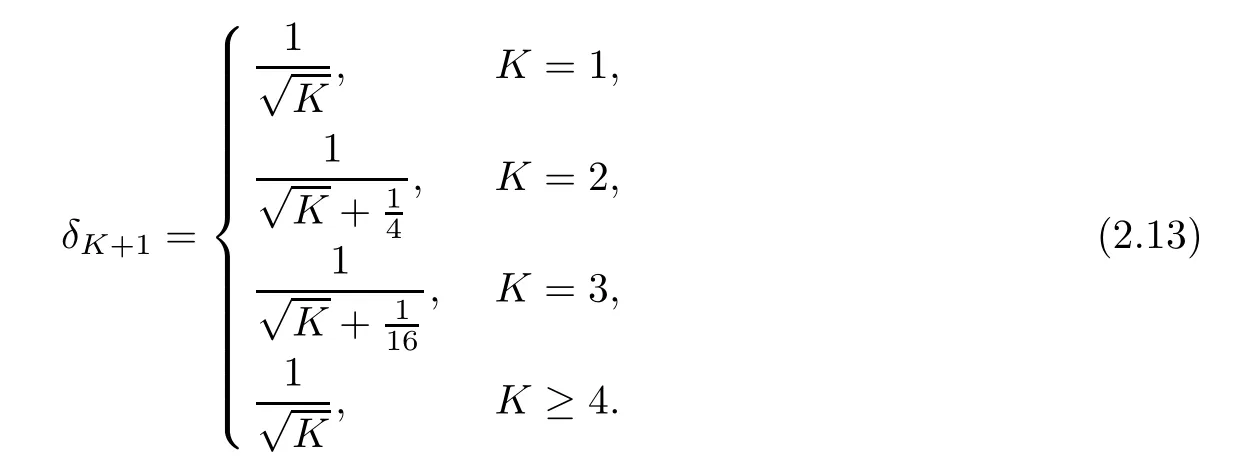

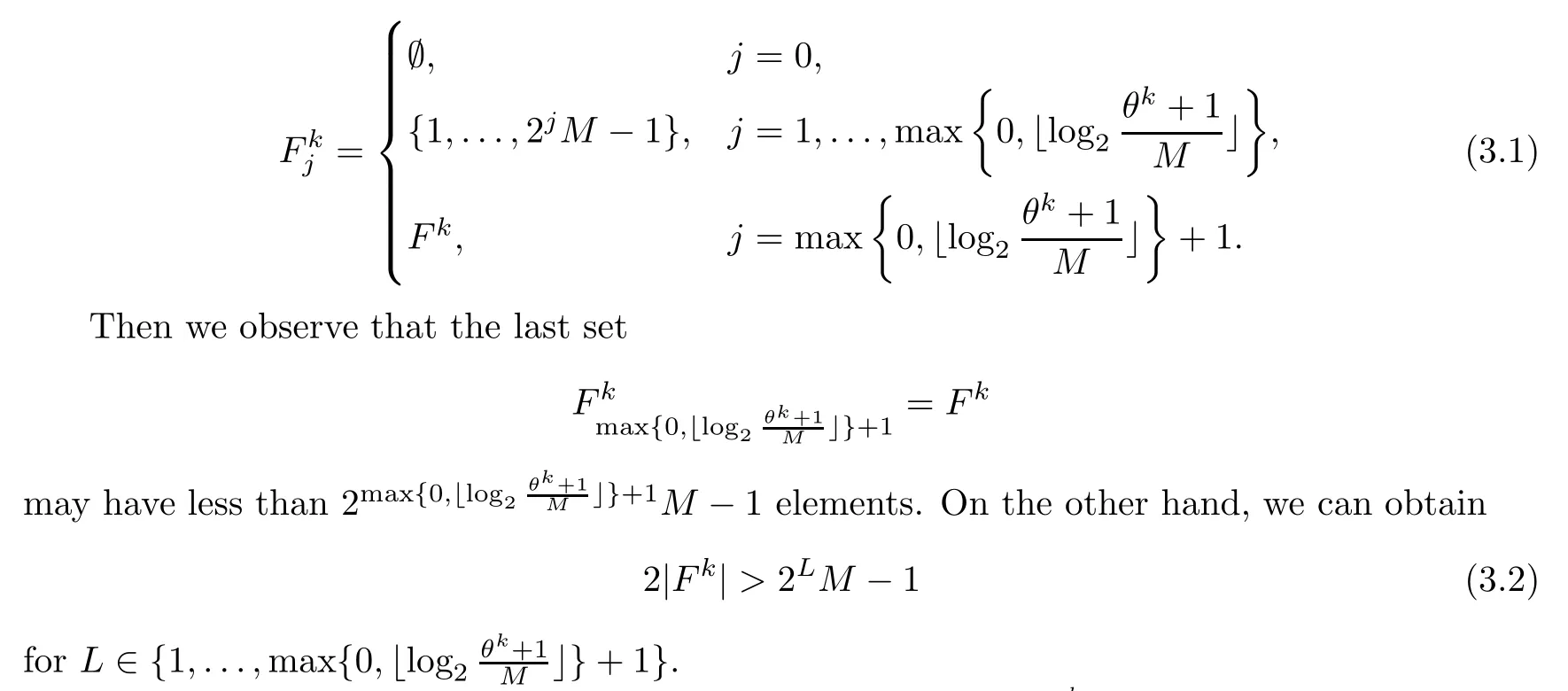

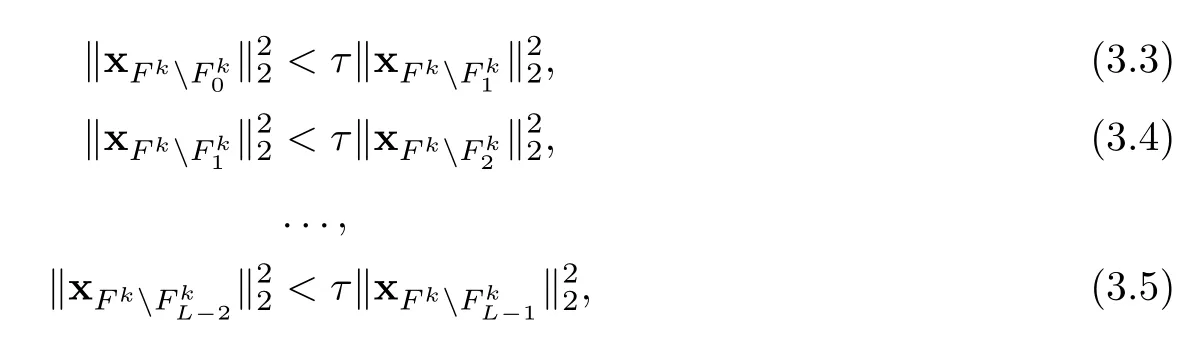

For the proof of the analysis of Theorem 2.2,our idea is related to[22].Here,we first denoteFk=TTkandθk=|Fk|.For notational convenience,assume thatxiis arranged in descending order of their magnitudes,i.e.,|x1|≥|x2|≥···≥|xθk|.Now,we define the subsetofFkas

Next,for constantτ>1,letL∈{1,2,...,maxbe the minimum positive integer satisfying

forj=0,1,...,L.Here we note that if (3.6) holds true for allL≥1,we ignore (3.3)–(3.5) and simply takeL=1.In addition,Lalways exists becauseso that (3.6) holds true at least for

In consideration of the selection rule of MOLS viewed as an extension of the gOMP rule,we will prove Theorem 2.2 by using mathematical induction inθk.In fact,the mathematical induction was proposed in[22].Here,θkstands for the number of remaining indices afterkiterations of MOLS.We first selectθk=0,and then no more iteration is needed,i.e.,T?Tk,so we have

Now we suppose that the conclusion holds up toθk-1,whereθk≥1 is a positive integer.Then,we need to prove that (2.4) holds true,i.e.,

holds true.

In order to prove (3.8),we will choose a decent number of support indices inFk,which must be selected within a specified number of additional iterations.Then the number of remaining support indices is upper bounded.

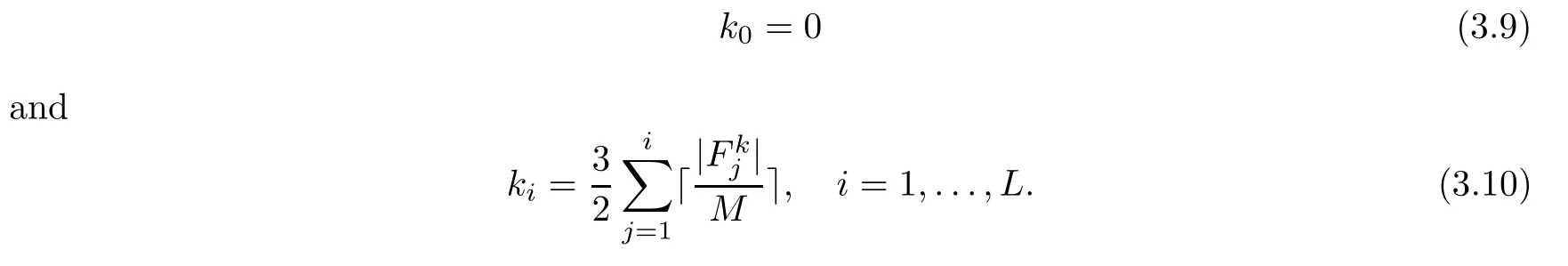

Now we define that

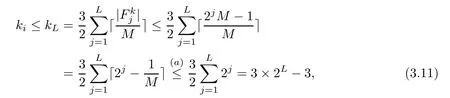

Because of the definition of,we have≤2jM-1 forj=1,...,L,and

where (a) follows from 0<≤1.Let

indicate specified additional iterations after runningkiterations of OLS.

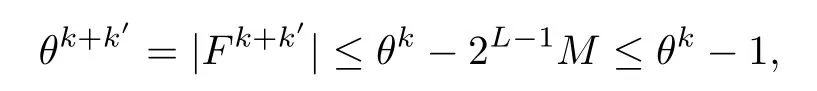

Now if we suppose that the number of remaining support indices satisfies after runningk+k′iterations,then inequality (3.8) holds when we require at mostadditional iterations.Our proof is completed.In fact,the total number of iterations of MOLS is

Since the index number of remaining support is no more thanθk-1,i.e.,

by the induction hypothesis we have that

Then we combine (3.15) with (3.16) and get

Thus,we require it to be ensured that (3.13) holds true.By the definition ofin (3.1),we have

Hence,we need to prove that inequality (3.18) holds true.

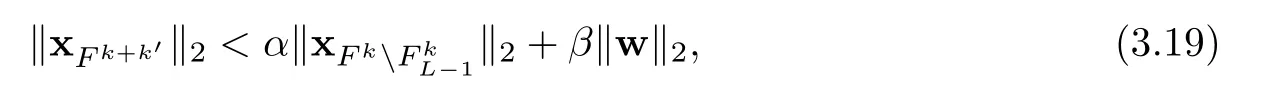

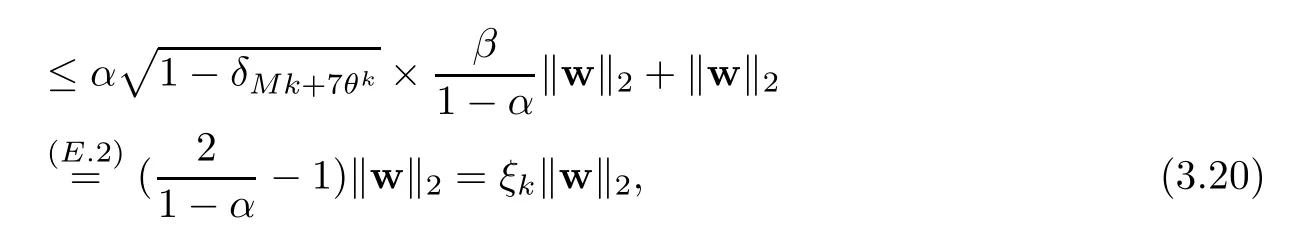

We have,by the result in Proposition E.6(see Appendix E),

whereαandβare defined in (E.1) and (E.2),respectively.

It follows from (2.7) thatα<1.Then we discuss two cases.

where (a) follows from the fact that the residual power of MOLS is non-increasing,and whereξkhas been defined in (2.5).

4 Conclusion

As an extension of OLS,MOLS is effective in reconstructing sparse signals and enhancing recovery performance.In this paper,we have presented an improved recovery guarantee of MOLS,which can stability recoverK-sparse signals from the noisy measurements initerations underδ7K≤0.094.

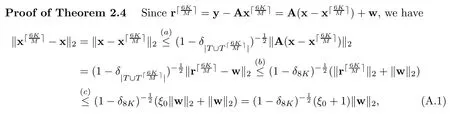

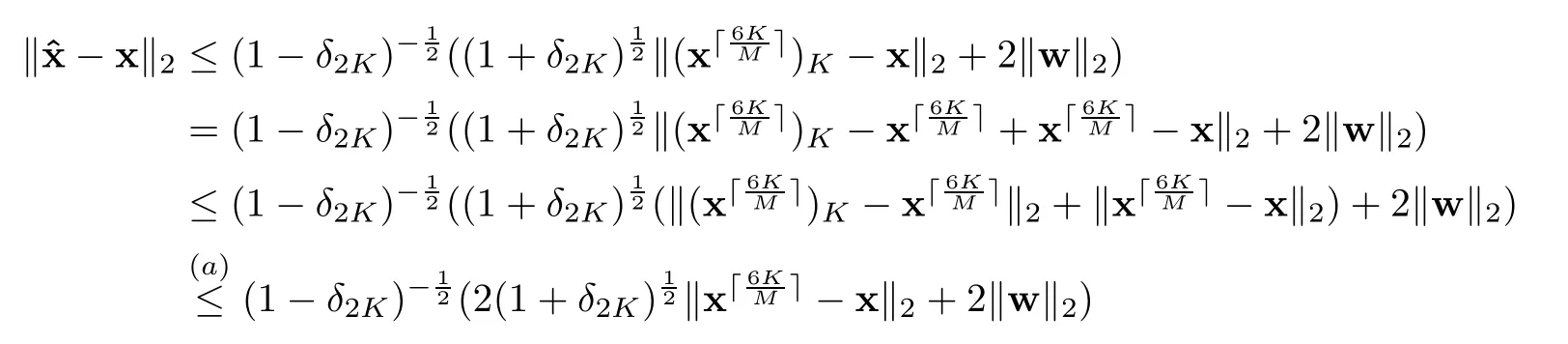

Appendix A

where (a) is based on the RIP,(b) uses the norm inequality and7K+M≤8K,and (c) is according to Corollary 2.3.

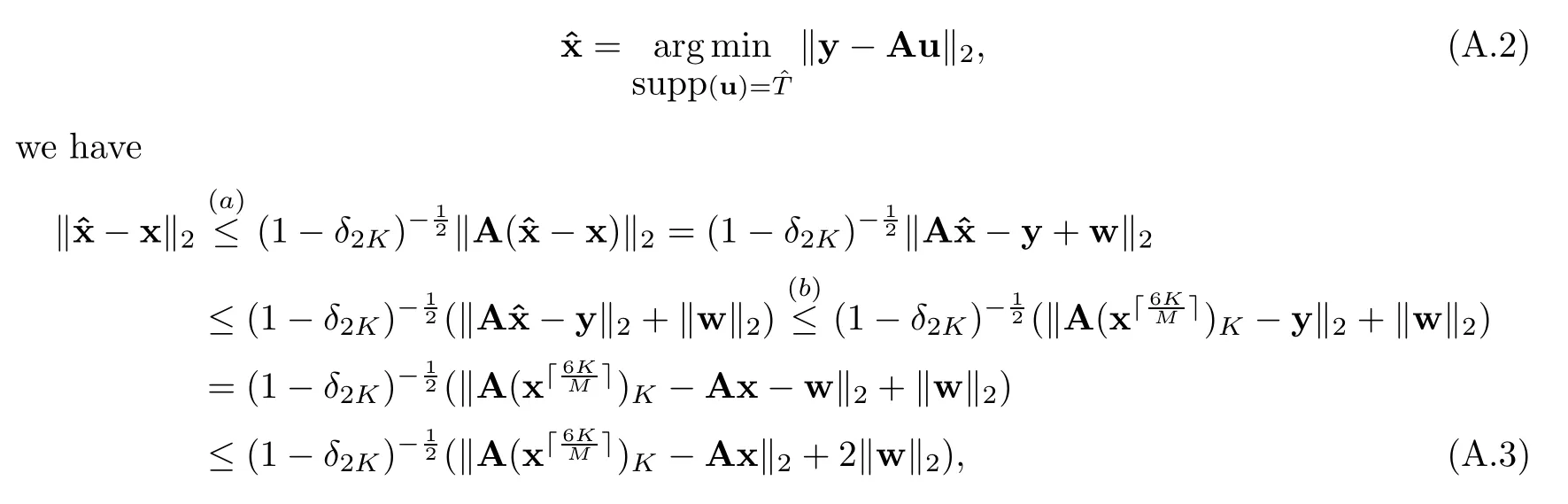

Now,we need to prove that (2.10) is true.Since the bestK-term approximationofis supported onand

where (a) is based on RIP,and (b) is from (A.2).

Using the RIP and the property of norm,(A.3) can be changed into where (a) is becauseis the bestK-term approximation of,and (b) follows from (2.9).□

Appendix B

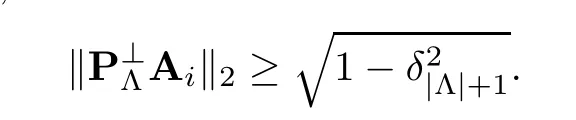

Lemma B.1([9],Lemma 3) Suppose that Λ?Ω and A∈Rm×nsatisfy the RIP of order|Λ|+1.Then,for anyi∈ΩΛ,

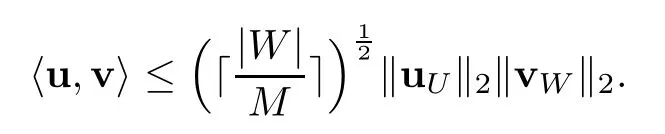

Lemma B.2([22],Lemma 1) Let u,v∈Rnbe two distinct vectors and letW=supp (u)∩supp (v).LetUbe the set ofMindices corresponding to theMmost significant elements in u.For any integerM≥1,we have

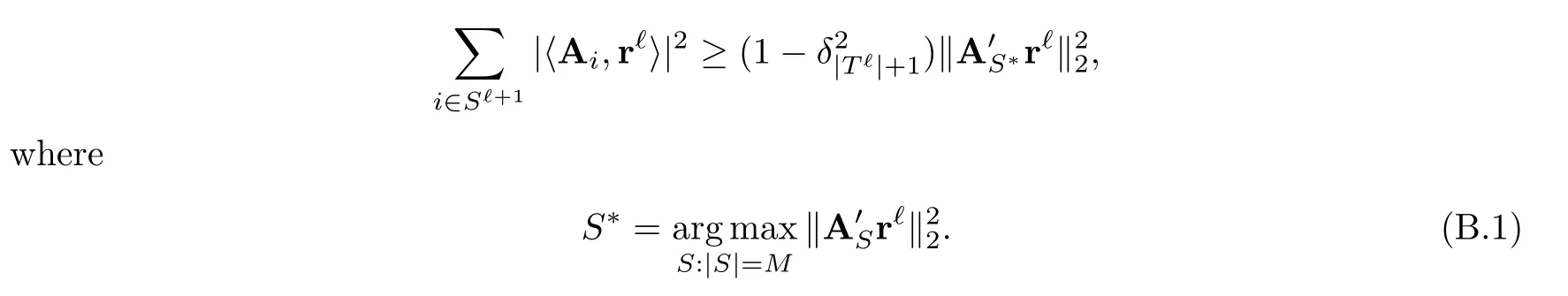

Lemma B.3Let A∈Rm×nbe a matrix with?2-normalized columns.According to Lemma 2.1,for the (?+1)-th (?≥k) iteration of MOLS,we have

ProofNote that this proof technique is similar in spirit to the work of[23,Lemma 8].By Lemma 2.1,we have

(b) follows from Lemma B.1,(c) is from Lemma 2.1,(d) is according toand (e) is based on (B.1). □

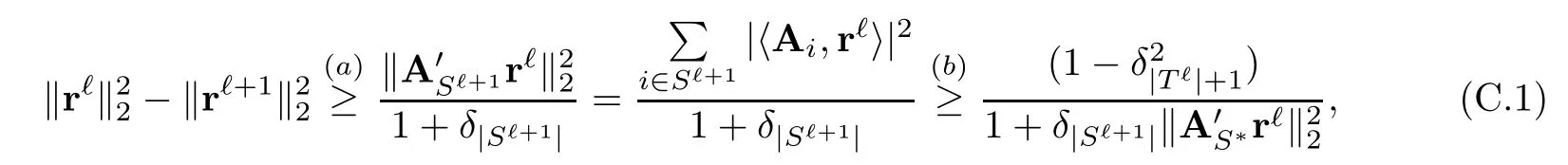

Appendix C

Lemma C.4For the (?+1)-th (?≥k) iteration of the MOLS Algorithm,the residual of MOLS has

ProofNote that this proof technique is similar in spirit to the work of[23,Lemma 8].For any integer?≥k,we have

where (a) follows from[6,(E.4)],and (b) follows from Lemma B.3. □

Appendix D

Lemma D.5Let A∈Rm×nbe a matrix with?2-normalized columns.For the (?+1)-th (?≥k) iteration of MOLS,we have

ProofNote that this proof technique is similar in spirit to the work of[23,Lemma 8].According to Lemma C.4,we only give that

where (a) is because supp (A′r?)∩supp (x?)=? so that〈A′r?,x?〉=0,(b) is according to

This completes the proof. □

Appendix E

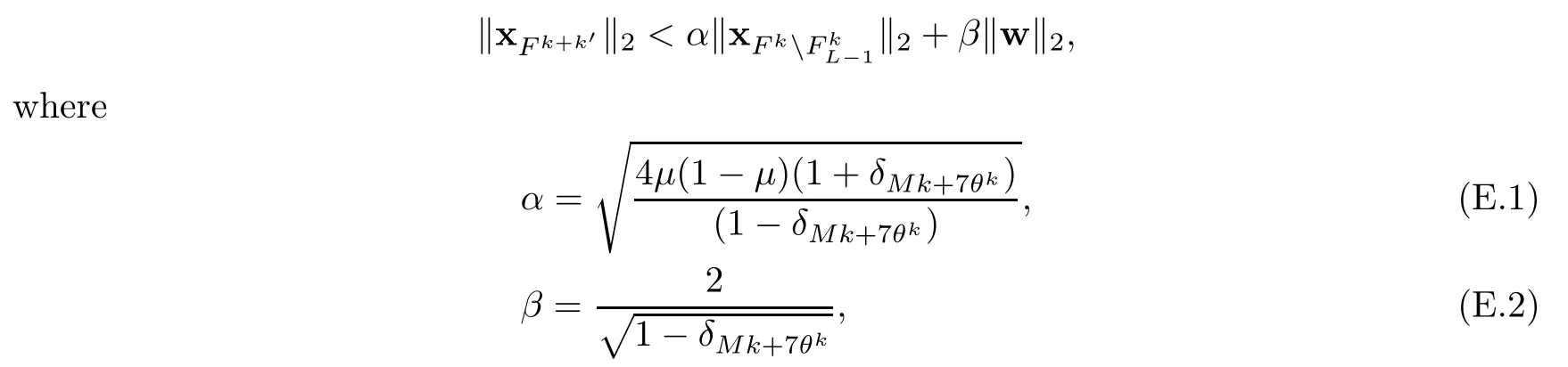

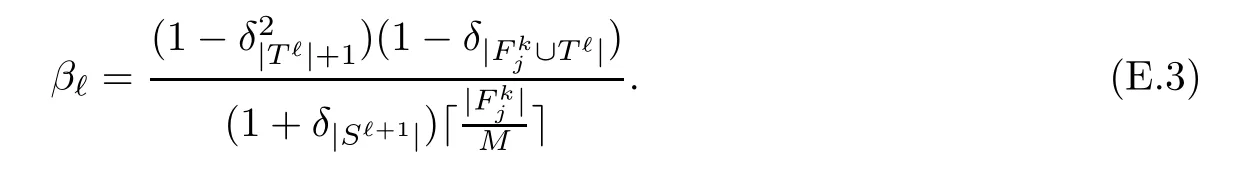

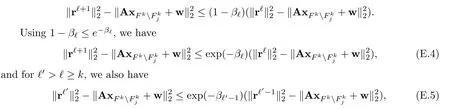

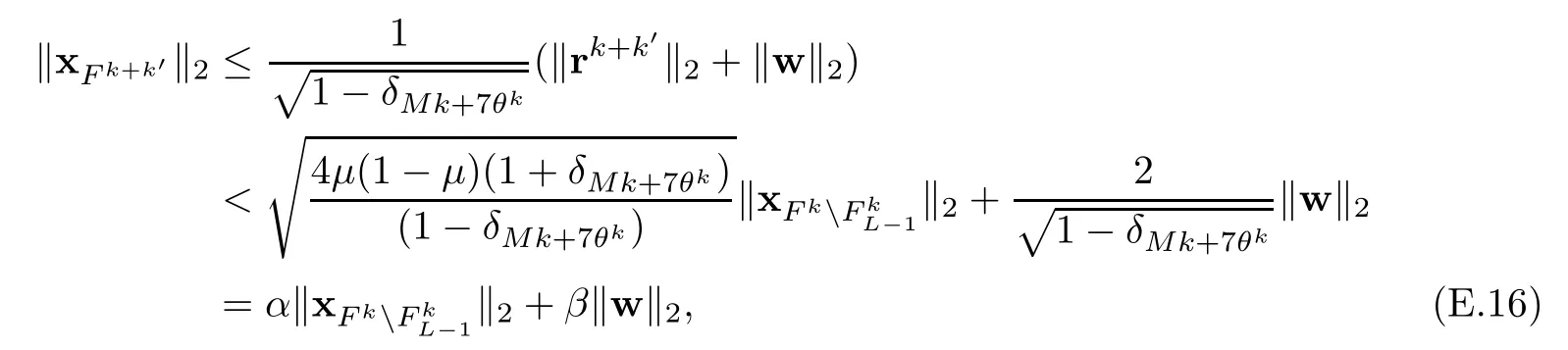

Proposition E.6Let A∈Rm×nbe a matrix.Letθk=|Fk|=|TTk|be the index number of a remaining support set after runningk(k≥0) iterations of MOLS.Let xFk+k′andbe two truncated vectors of x,wherek′indicates specified additional iterations after runningkiterations and.Then we have

andμhas been defined in (2.6).

ProofAccording to (D.1),let

Then,(D.1) can be rewritten as

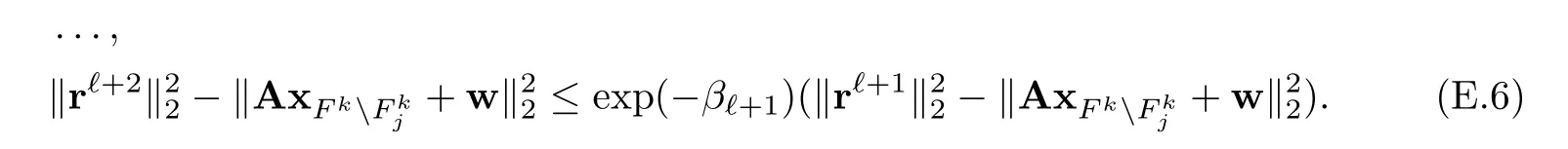

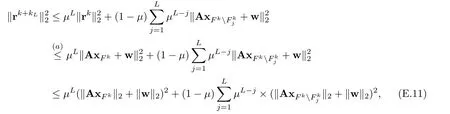

Thus,from (E.4)-(E.6),we have,further,that

where (a) holds,sinceβηis non-increasing.

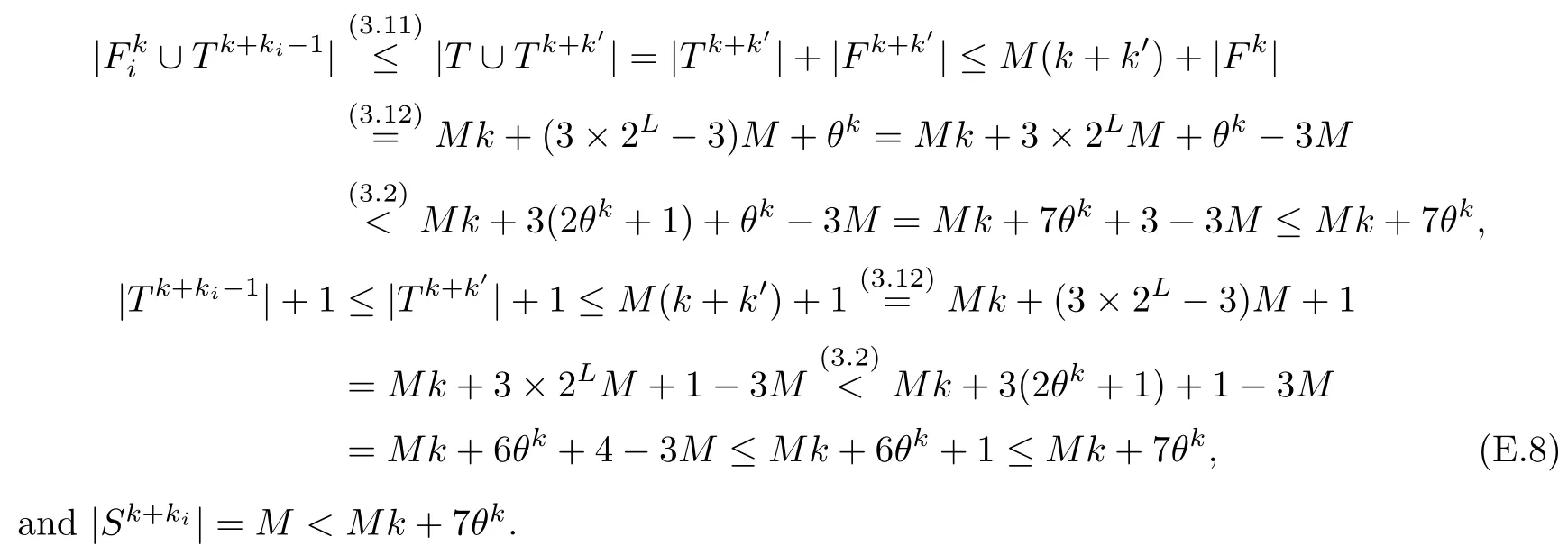

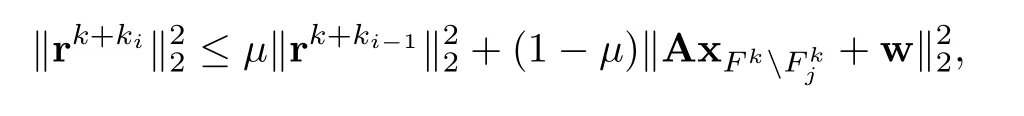

Let?′=k+kiand?=k+ki-1,i=1,...,L.Then we have

where (a) is due to (E.3),(b) is fromj≤i,(c) is true since (3.10),(d) is because

According to (2.6),(E.7) can be rewritten as

wherei=1,...,L.

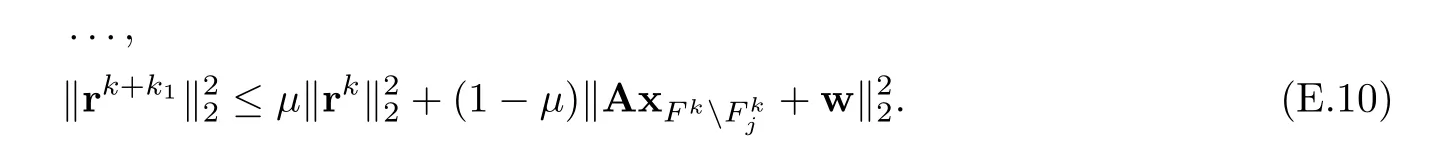

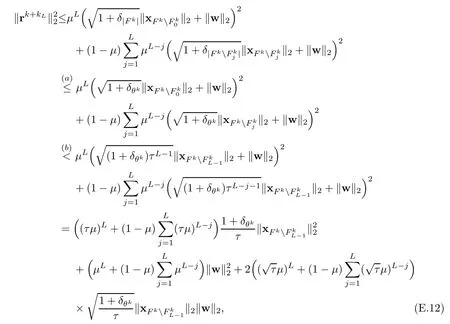

Note thatk0=0.Then we have

From (E.9)–(E.10),we get that

Note that

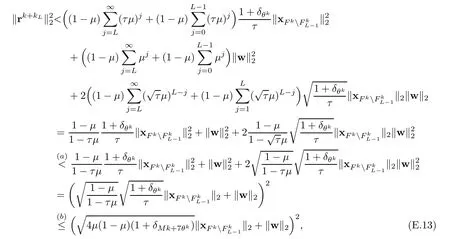

whenτ>1,τμ<1,andμ<1.Thus,(E.12) can be changed into

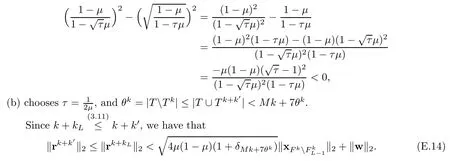

where (a) is from

On the other hand,we have that

where (a) is due to the RIP,and (b) follows from (E.8).

By relating (E.14) and (E.15),we obtain that

whereαandβare as defined in (E.1) and (E.2),respectively. □

Acta Mathematica Scientia(English Series)2022年3期

Acta Mathematica Scientia(English Series)2022年3期

- Acta Mathematica Scientia(English Series)的其它文章

- A ROBUST COLOR EDGE DETECTION ALGORITHM BASED ON THE QUATERNION HARDY FILTER*

- CONTINUOUS SELECTIONS OF THE SET-VALUED METRIC GENERALIZED INVERSE IN 2-STRICTLY CONVEX BANACH SPACES*

- EXISTENCE RESULTS FOR SINGULAR FRACTIONAL p-KIRCHHOFF PROBLEMS*

- BOUNDS FOR MULTILINEAR OPERATORS UNDER AN INTEGRAL TYPE CONDITION ON MORREY SPACES*

- LEARNING RATES OF KERNEL-BASED ROBUST CLASSIFICATION*

- A COMPACTNESS THEOREM FOR STABLE FLAT SL (2,C) CONNECTIONS ON 3-FOLDS*